Efficiency matters in web scraping and document generation. Extracting data from websites and converting it into professional-quality documents calls for tools and frameworks that integrate smoothly.

Enter Scrapy, a web scraping framework for Python, and IronPDF, a PDF library: two tools that work together to streamline the extraction of online data and the creation of dynamic PDFs.

Scrapy is a leading Python web crawling and scraping library that lets developers extract structured data quickly and precisely. With robust XPath and CSS selectors and an asynchronous architecture, it suits scraping jobs of any complexity.

IronPDF, in turn, is a .NET-based library (also installable for Python through pip) that supports programmatic creation, editing, and manipulation of PDF documents. Its PDF tools, which include HTML to PDF conversion and PDF editing, give developers a complete solution for producing dynamic, well-formatted PDF documents.

This post walks through integrating Scrapy with IronPDF and shows how the pair changes the way web scraping and document creation are done. We'll show how the two libraries work together to simplify complex jobs and speed up development workflows, from scraping data from the web with Scrapy to dynamically generating PDF reports with IronPDF.

Let's explore what Scrapy and IronPDF can do together for web scraping and document generation.

Scrapy's asynchronous architecture lets it process several requests at once. This increases efficiency and speeds up scraping, particularly when working with complicated websites or large amounts of data.
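As a rough illustration of how this concurrency can be tuned, the fragment below sets a few of Scrapy's built-in concurrency settings in settings.py. The values are illustrative assumptions, not recommendations from the original article.

```python
# settings.py (fragment); values are illustrative assumptions
CONCURRENT_REQUESTS = 32             # max requests Scrapy performs in parallel overall
CONCURRENT_REQUESTS_PER_DOMAIN = 8   # cap on parallel requests to a single domain
DOWNLOAD_DELAY = 0.25                # minimum seconds between requests to the same site
```

Higher concurrency usually shortens a crawl, but it also increases load on the target site, so a small delay is often kept alongside it.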

Scrapy has strong crawl management features, such as automated URL filtering, configurable request scheduling, and integrated robots.txt directive handling. Developers can adjust the crawl behavior to meet their own needs and to respect website guidelines.

Scrapy lets users navigate HTML pages and pick out elements using XPath and CSS selectors. This makes data extraction more precise and dependable, because developers can target specific elements or patterns on a web page.

Developers can specify reusable components for processing scraped data before exporting or storing it using Scrapy's item pipeline. By performing operations like cleaning, validation, transformation, and deduplication, developers can guarantee the accuracy and consistency of the data that has been extracted.

Scrapy ships with a number of middleware components and extensions that handle cookies, user-agent headers, HTTP proxies, and request throttling. They are easy to configure, and custom or third-party middleware can build on them to add behavior such as user-agent or proxy rotation, improving scraping efficiency and addressing common issues.
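To make the middleware idea concrete, here is a minimal sketch of a custom downloader middleware that picks a random user-agent for each request. The class name and the user-agent strings are assumptions for illustration only.

```python
import random


class RandomUserAgentMiddleware:
    """Hypothetical downloader middleware that sets a random User-Agent."""

    # Illustrative user-agent strings; replace with values suited to your crawler
    USER_AGENTS = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
        "Mozilla/5.0 (X11; Linux x86_64)",
    ]

    def process_request(self, request, spider):
        # Overwrite the header on every outgoing request
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        return None  # let Scrapy continue processing this request normally
```

Such a class would be enabled through the DOWNLOADER_MIDDLEWARES setting, for example under a hypothetical path like myproject.middlewares.RandomUserAgentMiddleware.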

By creating custom middleware, extensions, and pipelines, developers can further personalize and expand the capabilities of Scrapy thanks to its modular and extensible architecture. Because of its adaptability, developers may easily include Scrapy in their current processes and modify it to meet their unique scraping needs.

Install Scrapy using pip by running the following command:

    pip install scrapy

To define your spider, create a new Python file (such as example.py) under the spiders/ directory. Here is a basic spider that extracts data from a URL:

    import scrapy


    class QuotesSpider(scrapy.Spider):
        name = 'quotes'
        # Placeholder from the original; use a full URL including the scheme
        start_urls = ['xxxxxx.com']

        def parse(self, response):
            # Yield one item per quote block on the page
            for quote in response.css('div.quote'):
                yield {
                    'text': quote.css('span.text::text').get(),
                    'author': quote.css('span small.author::text').get(),
                    'tags': quote.css('div.tags a.tag::text').getall(),
                }

            # Follow the pagination link, if there is one
            next_page = response.css('li.next a::attr(href)').get()
            if next_page is not None:
                yield response.follow(next_page, self.parse)

To set Scrapy project parameters such as the user-agent, download delays, and pipelines, edit the settings.py file. This example changes the user-agent and enables a pipeline:

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = True

    # Set user-agent
    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'

    # Configure pipelines
    ITEM_PIPELINES = {
        'myproject.pipelines.MyPipeline': 300,
    }

Getting started with Scrapy and IronPDF means combining Scrapy's web scraping capabilities with IronPDF's dynamic PDF generation features. The steps below set up a Scrapy project that extracts data from websites and uses IronPDF to create a PDF document containing that data.

IronPDF is a .NET library for creating and editing PDF documents programmatically in C#, VB.NET, and other .NET languages. Because it gives developers a wide feature set for dynamically creating high-quality PDFs, it is a popular choice for many programs.

PDF Generation: Using IronPDF, programmers can create new PDF documents or convert existing content such as HTML, text, images, and other file formats into PDFs. This feature is very useful for creating reports, invoices, receipts, and other documents dynamically.

HTML to PDF Conversion: IronPDF makes it simple for developers to transform HTML documents, including their CSS styles and JavaScript, into PDF files. This enables the creation of PDFs from web pages, dynamically generated content, and HTML templates.

Modification and Editing of PDF Documents: IronPDF provides a comprehensive set of functions for modifying existing PDF documents. Developers can merge several PDF files, split them into separate documents, remove pages, and add bookmarks, annotations, and watermarks, among other features, to customize PDFs to their requirements.

After making sure Python is installed on your computer, use pip to install IronPDF.

    pip install IronPdf

To define your spider, create a new Python file (such as example.py) in the spiders directory of your Scrapy project (myproject/myproject/spiders). Here is a basic spider that extracts quotes from a URL:

class QuotesSpider(scrapy.Spider):

name = 'quotes'

#web page link

start_urls = ['http://quotes.toscrape.com']

def parse(self, response):

quotes = []

for quote in response.css('div.quote'):

Title = quote.css('span.text::text').get()

content= quote.css('span small.author::text').get()

# Generate PDF document

renderer = ChromePdfRenderer()

pdf=renderer.RenderHtmlAsPdf(self.get_pdf_content(quotes))

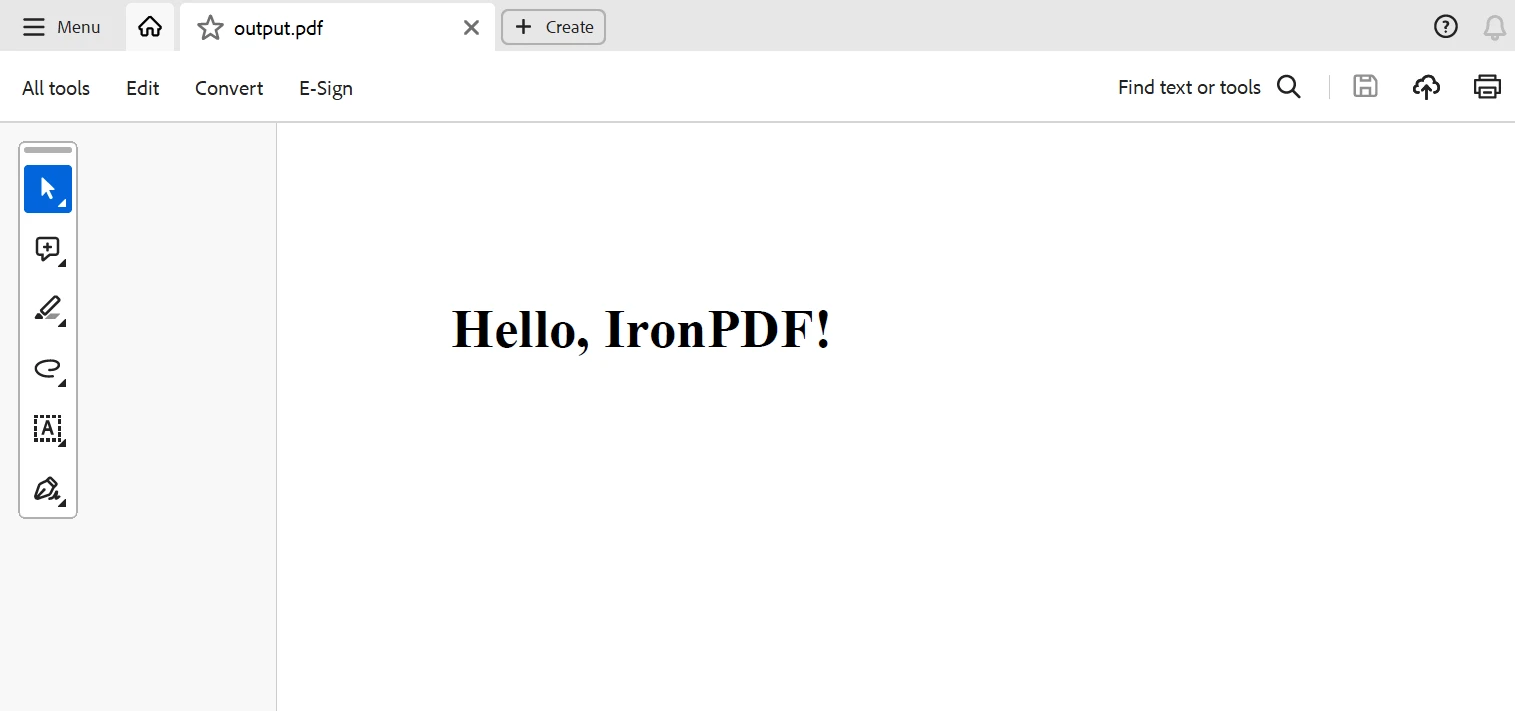

pdf.SaveAs("quotes.pdf")

def get_pdf_content(self, quotes):

html_content = "<html><head><title>"+Title+"</title></head><body><h1>{}</h1><p>,"+Content+"!</p></body></html>"

return html_contentIn the above code example of a Scrapy project with IronPDF, IronPDF is being used to create a PDF document using the data that has been extracted using Scrapy.

Here, the spider's parse method gathers quotes from the webpage and uses the get_pdf_content function to create the HTML content for the PDF file. This HTML material is subsequently rendered as a PDF document using IronPDF and saved as quotes.pdf.
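The HTML-building step described above can also be kept separate from the spider, which makes it easier to test. The sketch below assumes two hypothetical helpers, build_quotes_html and save_quotes_pdf, and escapes the scraped text so that characters such as < or & do not break the markup. IronPDF is imported inside the second function so that the HTML helper can be used without it.

```python
from html import escape


def build_quotes_html(quotes):
    """Return an HTML page for a list of (text, author) tuples."""
    rows = "".join(
        "<blockquote><p>{}</p><footer>{}</footer></blockquote>".format(
            escape(text), escape(author)
        )
        for text, author in quotes
    )
    return (
        "<html><head><title>Quotes</title></head>"
        "<body><h1>Quotes</h1>" + rows + "</body></html>"
    )


def save_quotes_pdf(quotes, path="quotes.pdf"):
    """Render the HTML with IronPDF and save it (requires the ironpdf package)."""
    # Imported lazily so build_quotes_html works without IronPDF installed
    from ironpdf import ChromePdfRenderer

    pdf = ChromePdfRenderer().RenderHtmlAsPdf(build_quotes_html(quotes))
    pdf.SaveAs(path)
```

A spider's parse method could then collect its quotes and pass the list to save_quotes_pdf once the page has been processed.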

To sum up, the combination of Scrapy and IronPDF offers developers a strong option for automating web scraping and producing PDF documents on the fly. IronPDF's flexible PDF generation features, together with Scrapy's web crawling and scraping capabilities, provide a smooth process for gathering structured data from a web page and turning the extracted data into professional-quality PDF reports, invoices, or documents.

With Scrapy spiders, developers can navigate the intricacies of the web, retrieve information from many sources, and arrange it systematically. Scrapy's flexible framework, asynchronous architecture, and support for XPath and CSS selectors give it the scalability required to manage a variety of web scraping tasks.

IronPDF comes with a lifetime license and is fairly priced when purchased as part of a bundle. The package costs $749, a one-time purchase covering several systems, and license holders get 24/7 access to online technical support. For pricing details and more about Iron Software's products, visit the Iron Software website.